Rollercoaster sort: the best of the two worlds

Disclaimer: This article is part of a serie of articles about the Rust Voracious Radix Sort Crate. This article is about the Rollercoaster sort inside the Voracious Radix Sort Crate, not the crate itself. For each article, the prerequisite is to read the previous articles and to understand them.

- What is radixsort ?

- Voracious sort

- Diverting LSD sort

- Rollercoaster sort: The best of the two worlds

- Peeka sort: Multithreaded radix sort – TBA –

When we talk about radix sort, there are two teams. LSD radix sort team vs MSD radix sort team. One can argue that LSD radix sort are faster because all the histograms can be computed at once whereas MSD radix sort is recursive so it is possible to multithread it and it is O(1) space complexity, LSD radix sort is O(n) space complexity. They are both right. So let’s take the best of the two worlds, the LSD radix sort speed and the MSD radix sort recursivity while keeping the O(1) space complexity. The downside is that this sort is very efficient with very large arrays, but performs poorly with small arrays. After intensive testing, I noticed that this sort is very efficient for i32, i64 and f64 primitive types. Unless someone tells me that there is a faster sort for these primitive types, I really think this sort is the state of the art for i32, i64 and f64 primitive types. For signed integers, there is a heuristic to avoid converting the signed integer to an unsigned integer. This conversion is done because, actually, radix sorts can only sort unsigned integers. When radix sorts sort other types than unsigned integers, there is, under the hood, a conversion from the other type into the unsigned integers.

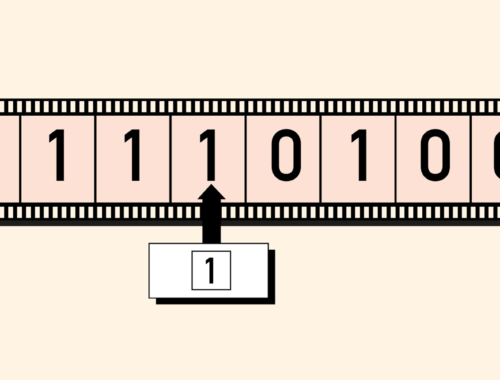

This sort is called « rollercoaster » because it starts with a MSD radix sort approach and then uses a DLSD radix sort approach and then, eventually, it uses again a MSD radix sort approach.

So far, I talk only about unstable radix sort. There is a stable radix sort in the Voracious radix sort crate, but I haven’t worked a lot on it. It is just a « naive » version of a stable radix sort. It is still a lot faster than the standard stable Rust sort().

Rollercoaster sort theory

What would be great if we could compute all the necessary historgrams at once and take into account only the minimum number of bits as it is done in the DLSD sort while using only O(1) space complexity. It would be even better if the sub array could fit inside the CPU cache and that we don’t need to convert signed integers into unsigned integers. What about the no free lunch theorem ? Sadly it still applies. Rollercoaster sort is O(1) space complexity, but there is a big constant. Several histograms can be computed at once, but not all of them. We don’t need to convert signed integers into unsigned integers, but we need to do it at least once. Once again it is a tradeoff. What is complicated is to choose the correct tradeoff to get the maximum efficiency.

Rollercoaster: The main idea is to start with a MSD radix sort approach, we compute a histogram on the most significant digits and we have buckets boundaries. If the bucket’s size is smaller than a constant experimentally found and since we know that LSD radix sort approach is faster than MSD radix sort approach, we switch to a DLSD sort on each bucket. So we can have several MSD passes and then, we switch to a DLSD radix sort. The DLSD radix sort can fallback on a MSD radix sort, hence the sort’s name.

Histograms: We have to compute one histogram for each MSD passes, and then several histograms at once for the DLSD radix sort. We compute less histograms than when we use only a MSD approach. You can notice that if the constant on which we switch to a DLSD radix sort is big, there are not many MSD passes. So the number of pass to compute histograms is almost the same as if there was only a DLSD approach, which computes less histograms than a pure LSD approach.

Space complexity: Since the DLSD is triggered only when a certain threshold is reached, it is O(1) space complexity. Depending on the threshold and the CPU cache, it may even fit into CPU cache. The threshold is still big but by an order of magnitude smaller than a DLSD or LSD approach.

Type conversion: To sort signed integers i32 or i64 with a radix sort, one has to convert them into unsigned integers. This is done by flipping the first bit. All the other bits are left untouched. Since the first MSD pass is done on the highest level, the other remaining level to sort with the DLSD approach does not need to convert remaining bits since nothing is done on them. After the MSD pass, each buckets are well sorted, there is still to sort elements inside each bucket. We can call another radix sort on the remaining level without flipping the first bit. That way, we spare a lot of useless computation.

A complicated fallback: When I said there is a DLSD fallback, it is actually a bit more complicated. The idea is still to use only the minimum number of bits but it comes with a cost, insertion sort cost or boundaries search cost and some other implementation details. Sometimes it is more efficient to use a LSD if there is not many passes left to do. Depending on the conditions, the fallback is a LSD radix sort or a DLSD radix sort.

Rollercoaster sort: example

I like to explain the theory with an example. I think it is easier to understand. This let’s use i8 instead of u8. As usual for the example, let’s choose a radix equals 2. The binary representation is done with the two complement method.

let mut array: Vec<i64> = vec![57, -32, -47, 18, 9, 5, -5, -60, 22, -17];

// In binary it is: [00111001, 11100000, 11010001, 00010010, 00001001,

// 00000101, 11111011, 11000100, 00010110, 11101111]The first pass is a MSD pass and since it is i8, we have to convert it first by flipping the first bit.

let mut array: Vec<i64> = vec![57, -32, -47, 18, 9, 5, -5, -60, 22, -17];

// In binary it is: [00111001, 11100000, 11010001, 00010010, 00001001,

// 00000101, 11111011, 11000100, 00010110, 11101111]

// Flip the first bit.

// With conversion: [10111001, 01100000, 01010001, 10010010, 10001001,

// 10000101, 01111011, 01000100, 10010110, 01101111]

// After the first MSD pass.

assert_eq!(array, vec![-32, -47, -5, -60, -17, 57, 18, 9, 5, 22])

// In binary it is: [01100000, 01010001, 01111011, 01000100, 01101111

// |________________________________________________|

// 01******

// 10111001, 10010010, 10001001, 10000101, 10010110]

// |________________________________________________|

// 10******As you can notice, the first MSD pass separates negative values from positive values. Once the first MSD pass is done, the remaining bits to sort are not transformed in anyway, so it is possible to cast the i8 into a u8 – or a i32 into a u32 or a i64 into a u64 – and sort will still be valid.

Now the first MSD pass is done, let’s say that we have reach the threshold to switch to the DLSD passes. I recall that:

nb_passes = ceil(log2(array.len()) / radix)

We have to apply this on each bucket – 01 and 10 – . For the first bucket 01: nb_passes = ceil(3 / 2) = 2, we need two DLSD passes and for the second bucket 10: nb_passes = ceil(3 / 2) = 2, we need two DLSD passes too.

// We start with:

assert_eq!(array, vec![-32, -47, -5, -60, -17, 57, 18, 9, 5, 22])

// In binary it is: [11100000, 11010001, 11111011, 11000100, 11101111

// 00111001, 00010010, 00001001, 00000101, 00010110]

// After the first DLSD pass on the first bucket 01:

assert_eq!(bucket01, vec![-32, -47, -60, -5, -17]);

// In binary it is: [11100000, 11010001, 11000100, 11111011, 11101111]

// |____________________________||________||________|

// ****00** ****10** ****11**

// After the first DLSD pass on the second bucket 10:

assert_eq!(bucket10, vec![18, 5, 22, 57, 9]);

// In binary it is: [00010010, 00000101, 00010110, 00111001, 00001001]

// |________||__________________||__________________|

// ****00** ****01** ****10**Now the second DLSD pass:

// After the second DLSD pass on the first bucket 01:

assert_eq!(bucket01, vec![-60, -47, -32, -17, -5]);

// In binary it is: [11000100, 11010001, 11100000, 11101111, 11111011]

// |________||________||__________________||________|

// **00**** **01**** ****10** **11****

// After the second DLSD pass on the second bucket 10:

assert_eq!(bucket10, vec![5, 9, 18, 22, 57]);

// In binary it is: [00000101, 00001001, 00010010, 00010110, 00111001]

// |__________________||__________________||________|

// **00**** **01**** **11****As you can see, after the second DLSD pass, the two buckets are sorted, hence the whole array. The insertion sort will only check that the bucket is well sorted, there won’t be any swap, so it is very fast.

assert_eq!(array, vec![-60, -47, -32, -17, -5, 5, 9, 18, 22, 57]);In this case, the required memory to sort the array was equivalent to half of the array – actually it depends on the threshold -. Histograms were computed twice, once for the MSD pass and once for the DLSD passes. We spare one histogram computation. There were three passes – one MSD and two DLSD – which were the minimum to fully sort the array, we spare one pass if we would have used a « naive » LSD approach. On two passes, we haven’t convert the i8 into u8. There was another improvement but not seen in this example, for positive values, there might be « empty » level – level with all the bit equal 0 -, which are skipped. This improvement is never done on signed integers.

To summarize, what was improved:

- Use of CPU cache if possible – it depends on the threshold –

- Use less memory – it depends on the threshold –

- Less passes on the whole array to compute histograms

- Use minimum amount of pass to sort the whole array, as it is with the DLSD approach

- Do not convert the signed integers into unsigned integers within the DLSD passes

- Skip empty levels for positive values

- Skip empty levels for negative values too, but it is very unlikely

One question remains, what is the « best » threshold. This is a very complicated question, because I think the best threshold depends on the CPU. In the crate, I set the threshold with respect to the CPU I was using to develop the Voracious radix sort. Since I have a Ryzen 3950x, I set the threshold to 128_000. Which is huge, but the Rollercoaster sort is very efficient with very big arrays. For smaller arrays, it fallbacks on other sorts.

All these improvements can be apply with f32 and f64. It is less likely to skip level with float, but for float that do not need to be transformed, it is possible to cast them into u32 or u64 and use the DLSD approach.

This is why the Rollercoaster sort performs very well on signed integers and floats. The downside, is that because the first pass is a MSD pass followed by DLSD passes, the array needs to be very big so that the sort is efficient. Radix sorts are known to perform poorly on small arrays and most of the radix sorts are implemented for unsigned integers only. Radix sorts are not design to sort something else than unsigned integers. With the Rollercoaster sort, signed integers and floats are well taken into account. For unsigned integers, DLSD sort is the fastest among all the radix sorts in the Voracious radix sort crate.

Benchmark

This will be done later. There are all the results in the Github repository.

Diverting LSD sort

Cookies aux graines et flocons d'avoine

Vous aimerez aussi

Implement PostgreSQL Pool connection in Rust

3 novembre 2022

What is radixsort ?

8 novembre 2020